System design refers to the process of conceptualizing and structuring a software system to address specific requirements. It involves identifying the system's components, their relationships and interactions, and designing the interfaces between them. System design encompasses architectural decisions, data flow, communication protocols, algorithms, and storage considerations. The goal is to create a robust, scalable, and efficient system that meets functional and non-functional requirements such as performance, reliability, and security. It requires knowledge of software engineering principles, design patterns, and trade-offs. System design is crucial in building complex software applications that effectively solve real-world problems.

At the core of system design is the identification and understanding of the problem domain. This involves analyzing user requirements, constraints, and objectives. Once the requirements are clear, system designers work on defining the system's structure and behavior.

Architectural decisions play a vital role in system design. Designers select appropriate architectural patterns and styles that determine how the components will interact and communicate. They consider factors like modularity, scalability, performance, and extensibility.

The design process includes defining data flow, storage mechanisms, and communication protocols. Designers choose suitable algorithms, data structures, and database models to optimize system performance and ensure proper data management.

During system design, non-functional requirements are also addressed. These include reliability, availability, security, and usability. Designers incorporate mechanisms for fault tolerance, data backup, access control, and user interface design to enhance the overall system quality.

System design is an iterative process that involves constant refinement and improvement. It requires collaboration among different stakeholders, such as software architects, developers, and project managers. Documentation, diagrams, and models, such as UML (Unified Modeling Language), are often used to visualize and communicate the system design.

Understanding Vertical Scaling and Horizontal Scaling

Vertical Scaling

Vertical scaling, also known as scaling up, refers to increasing the capacity of a single server or system component to handle higher workloads. It involves upgrading the existing hardware, such as adding more CPU power, increasing memory, or expanding storage capacity, to enhance the performance and capacity of the system. In vertical scaling, the system's resources are concentrated on a single machine, allowing it to handle more demanding tasks.

Horizontal Scaling

Horizontal scaling, also known as scaling out, involves adding more servers or system components to distribute the workload across multiple machines. Instead of increasing the capacity of a single server, horizontal scaling focuses on adding more machines to handle the growing workload. Each machine operates independently, but together they work as a cluster or networked system. This approach allows for increased scalability and fault tolerance, as the workload is divided among multiple machines.
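A quick way to reason about "adding more machines" is a back-of-the-envelope sizing calculation. The Python sketch below is illustrative only: the function name `servers_needed`, the per-server throughput, and the convention of keeping one spare server for fault tolerance are assumptions, not figures from the text. In practice the per-server number would come from load testing.

```python
import math


def servers_needed(peak_rps: float, per_server_rps: float, spare: int = 1) -> int:
    """Estimate how many identical servers a horizontally scaled tier needs.

    peak_rps:       expected peak requests per second (assumed figure)
    per_server_rps: requests per second one server can handle (assumed figure)
    spare:          extra servers kept so that losing `spare` machines
                    still leaves enough capacity (assumed convention)
    """
    if peak_rps < 0 or per_server_rps <= 0 or spare < 0:
        raise ValueError("rates must be positive and spare non-negative")
    # Round up: a fraction of a server still needs a whole machine.
    base = math.ceil(peak_rps / per_server_rps)
    return base + spare


# Hypothetical figures: 4,500 req/s at peak, 1,000 req/s per server, one spare.
print(servers_needed(4500, 1000))  # 6
```

Growing the workload under this model means raising the server count rather than buying a bigger machine, which is the essence of scaling out.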

Comparison

Vertical scaling is suitable when an increased workload needs to be handled without the complexity of managing multiple servers. It can be useful for applications with a smaller user base or when the workload is predictable and can be managed by a single powerful server. However, vertical scaling has limits: there is a hard ceiling on how much CPU, memory, and storage a single server can hold, and that one server remains a single point of failure.

Horizontal scaling, on the other hand, offers better scalability and fault tolerance. By adding more servers to the system, horizontal scaling allows for better distribution of the workload, improved performance, and the ability to handle higher traffic or increased processing demands. It also provides redundancy: the failure of one server does not bring down the entire system, provided the remaining servers have enough capacity to absorb its share of the load. However, horizontal scaling introduces complexities related to load balancing, data synchronization, and inter-server communication.
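The redundancy argument can be quantified with a simple probability model: if each of n servers is up with probability a, the chance that at least one is up is 1 - (1 - a)^n. The sketch below assumes that servers fail independently, that each has the same availability, and that a single surviving server can keep the service running. Real systems often violate all three (shared power, shared network, capacity limits), so treat the result as an optimistic estimate. The function name `cluster_availability` is hypothetical.

```python
def cluster_availability(per_server: float, n: int) -> float:
    """Probability that at least one of n servers is up.

    Assumes independent failures and that any one surviving server
    can carry the service on its own (capacity is ignored).
    """
    if not 0.0 <= per_server <= 1.0 or n < 1:
        raise ValueError("per_server must be in [0, 1] and n >= 1")
    # The system is down only if every server is down at the same time.
    return 1.0 - (1.0 - per_server) ** n


for n in (1, 2, 3):
    print(f"{n} server(s): {cluster_availability(0.99, n):.6f}")
# 1 server(s): 0.990000
# 2 server(s): 0.999900
# 3 server(s): 0.999999
```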

In summary, vertical scaling involves upgrading a single server's resources to handle an increased workload, while horizontal scaling involves adding more servers to distribute the workload. The choice between vertical and horizontal scaling depends on factors such as the application's requirements, scalability needs, budget, and desired level of fault tolerance.

Load Balancer

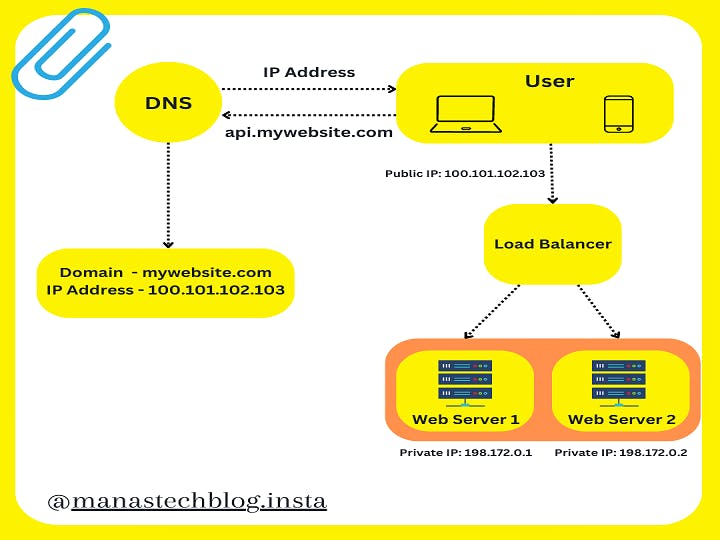

A load balancer evenly distributes the incoming traffic among web servers that are defined in a load-balanced set.

A load balancer is a crucial component in modern computer systems and network architecture. It acts as a mediator between clients (such as web browsers or applications) and a group of backend servers, distributing incoming network traffic across these servers in an efficient and balanced manner. The primary purpose of a load balancer is to optimize resource utilization, improve performance, and ensure high availability of services.

Here's a detailed explanation of how a load balancer works and its key functionalities:

Traffic Distribution: When a client sends a request to access a particular service or application, it first reaches the load balancer. The load balancer examines the incoming request and determines which backend server is best suited to handle it. This decision is made based on various factors, such as server health, current server load, and predefined algorithms or rules.

Load Balancing Algorithms: Load balancers utilize different algorithms to distribute traffic effectively. Common algorithms include:

Round Robin: Requests are assigned to the backend servers in turn, cycling through the list, so each server receives an equal share of requests.

Least Connections: Traffic is sent to the server with the fewest active connections, aiming to balance the load across all servers.

IP Hash: The server is chosen from a hash of the client's IP address, so requests from the same client are routed to the same server as long as the set of servers does not change.
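The three algorithms above can be sketched in a few lines of Python. These are simplified, single-threaded illustrations that assume backends are identified by plain name strings; the class and method names are hypothetical and do not correspond to any particular load balancer's API.

```python
import hashlib
import itertools
from typing import Dict, List


class RoundRobin:
    """Hands out servers in a fixed cycle: s1, s2, s3, s1, ..."""

    def __init__(self, servers: List[str]):
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnections:
    """Picks the server with the fewest active connections (ties go to the first listed)."""

    def __init__(self, servers: List[str]):
        self.active: Dict[str, int] = {s: 0 for s in servers}

    def pick(self) -> str:
        server = min(self.active, key=self.active.get)
        self.active[server] += 1  # caller must call release() when the connection closes
        return server

    def release(self, server: str) -> None:
        self.active[server] -= 1


class IPHash:
    """Maps a client IP to a server via a stable hash, modulo the pool size."""

    def __init__(self, servers: List[str]):
        self.servers = list(servers)

    def pick(self, client_ip: str) -> str:
        digest = hashlib.sha256(client_ip.encode()).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.servers)
        return self.servers[index]


servers = ["app1", "app2", "app3"]
rr = RoundRobin(servers)
print([rr.pick() for _ in range(4)])  # ['app1', 'app2', 'app3', 'app1']

lc = LeastConnections(servers)
a = lc.pick()      # app1
b = lc.pick()      # app2
lc.release(a)      # app1 is back to 0 connections
print(lc.pick())   # app1
```

`IPHash` uses SHA-256 rather than Python's built-in `hash()` because string hashing in Python is randomized per process, which would send the same client to a different server after a restart. Because the index is taken modulo the pool size, adding or removing a server remaps many clients, which is why the stickiness only holds while the pool is unchanged.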

Health Monitoring: Load balancers continuously monitor the health and performance of backend servers. They regularly send health checks (such as HTTP requests) to verify that servers are responsive and able to handle requests. If a server fails to respond or becomes overloaded, the load balancer automatically removes it from the pool of active servers, ensuring that traffic is not routed to the unhealthy server. Once the server passes its health checks again, it is typically returned to the pool.
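A health monitor boils down to a periodic loop that probes each backend and updates the active pool. In this hedged Python sketch, the `/health` endpoint, the `HealthMonitor` class, and the rule of removing a server only after `fail_threshold` consecutive failed checks (to avoid reacting to a single dropped probe) are assumptions for illustration. A server rejoins the pool as soon as it passes a check.

```python
import http.client
import urllib.request
from typing import Callable, Dict, List


def http_check(server: str, timeout: float = 2.0) -> bool:
    """Return True if GET http://<server>/health answers 200 within the timeout.

    The /health path is a hypothetical convention, not a standard.
    """
    try:
        with urllib.request.urlopen(f"http://{server}/health", timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, http.client.HTTPException):
        # Connection refused, timeout, non-2xx status, malformed response, ...
        return False


class HealthMonitor:
    """Tracks consecutive failures and exposes the servers that should get traffic."""

    def __init__(self, servers: List[str], check: Callable[[str], bool],
                 fail_threshold: int = 3):
        self.servers = list(servers)
        self.check = check
        self.fail_threshold = fail_threshold
        self.failures: Dict[str, int] = {s: 0 for s in self.servers}

    def run_once(self) -> List[str]:
        """Probe every server once and return the updated active pool."""
        for server in self.servers:
            if self.check(server):
                self.failures[server] = 0      # healthy again: rejoin immediately
            else:
                self.failures[server] += 1
        return self.active()

    def active(self) -> List[str]:
        return [s for s in self.servers if self.failures[s] < self.fail_threshold]
```

In a running load balancer, `run_once()` would be called on a timer (for example every few seconds) with `http_check` passed as `check`; the checker is injectable so the pool logic can be exercised without a network.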

Session Persistence: Some applications require maintaining a consistent session for a particular user across multiple requests. Load balancers can be configured to implement session persistence, ensuring that all requests from a specific client are directed to the same backend server. This helps maintain session data and prevents disruptions in user experience.
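Session persistence is often implemented by remembering which backend served a session's first request. The Python sketch below assumes each request carries a session identifier (for instance from a cookie); the `StickySessions` class and its methods are hypothetical. New sessions are spread round-robin, and a session whose pinned server has left the pool is reassigned.

```python
from typing import Dict, List


class StickySessions:
    """Pins each session ID to the backend that served its first request."""

    def __init__(self, servers: List[str]):
        self.servers = list(servers)
        self.assignments: Dict[str, str] = {}  # session ID -> server
        self._counter = 0

    def _pick_new(self) -> str:
        # Spread new sessions round-robin over the current pool.
        server = self.servers[self._counter % len(self.servers)]
        self._counter += 1
        return server

    def route(self, session_id: str) -> str:
        server = self.assignments.get(session_id)
        if server is None or server not in self.servers:
            # First request of the session, or its server has left the pool.
            server = self._pick_new()
            self.assignments[session_id] = server
        return server

    def remove_server(self, server: str) -> None:
        self.servers.remove(server)


lb = StickySessions(["app1", "app2"])
print([lb.route("sess-A") for _ in range(3)])  # ['app1', 'app1', 'app1']
print(lb.route("sess-B"))                      # app2
```

Reassignment keeps the user served but loses any session data held only in the old server's memory, which is one reason some systems keep session state in a shared store instead of relying on stickiness alone.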

Scalability and High Availability: Load balancers play a crucial role in scaling system resources horizontally. As traffic increases, additional servers can be added to the backend server pool, and the load balancer automatically distributes the load across the new servers. This allows for better resource utilization, improved performance, and the ability to handle higher traffic demands. Load balancers also provide high availability by detecting server failures and rerouting traffic to healthy servers, minimizing downtime and ensuring a seamless user experience.
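The point that new servers start receiving traffic automatically can be illustrated with a pool that changes while requests are flowing. This Python sketch uses round-robin selection over whatever the pool contains at the moment of each request; `ServerPool`, `add_server`, and `remove_server` are hypothetical names, and real load balancers usually add a server only after it passes health checks.

```python
from collections import Counter
from typing import List


class ServerPool:
    """A backend pool that servers can join or leave while traffic is flowing."""

    def __init__(self, servers: List[str]):
        self.servers = list(servers)
        self._counter = 0

    def add_server(self, server: str) -> None:
        if server not in self.servers:
            self.servers.append(server)

    def remove_server(self, server: str) -> None:
        self.servers.remove(server)

    def pick(self) -> str:
        if not self.servers:
            raise RuntimeError("no servers in pool")
        # Round-robin over the pool as it is right now.
        server = self.servers[self._counter % len(self.servers)]
        self._counter += 1
        return server


pool = ServerPool(["app1", "app2"])
before = Counter(pool.pick() for _ in range(600))
pool.add_server("app3")  # scale out
after = Counter(pool.pick() for _ in range(600))
print(dict(before))  # {'app1': 300, 'app2': 300}
print(dict(after))   # {'app1': 200, 'app2': 200, 'app3': 200}
```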

SSL Termination and Encryption: Load balancers can handle SSL/TLS encryption and decryption (SSL termination), relieving backend servers of the computational cost of TLS handshakes and encryption. The connection between the client and the load balancer stays encrypted; traffic from the load balancer to the backends is either sent in plaintext over a trusted internal network or re-encrypted when end-to-end encryption is required.
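With Python's standard library, termination can be sketched by wrapping the client-facing socket in an `ssl.SSLContext` while reaching the backend over a plain socket. This is a minimal, single-connection illustration, not a production proxy: the function names, certificate and key paths, and addresses are hypothetical, and it assumes each request and response fits in one `recv()` call. The load-balancer-to-backend hop is plaintext here, which is only appropriate on a trusted internal network.

```python
import socket
import ssl
from typing import Tuple

Address = Tuple[str, int]


def make_tls_context(certfile: str, keyfile: str) -> ssl.SSLContext:
    """Server-side TLS context holding the certificate that clients will see."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    return ctx


def serve_one(listen: Address, backend: Address, ctx: ssl.SSLContext) -> None:
    """Accept one TLS client, decrypt its request, and relay it to a backend in plaintext.

    Simplification: assumes the request and the response each fit in a single recv().
    """
    with socket.create_server(listen) as server:
        raw_conn, _ = server.accept()
        with ctx.wrap_socket(raw_conn, server_side=True) as tls_conn:
            request = tls_conn.recv(65536)          # already decrypted by the TLS layer
            with socket.create_connection(backend) as upstream:
                upstream.sendall(request)           # the backend never sees TLS
                response = upstream.recv(65536)
            tls_conn.sendall(response)              # encrypted again on the way out
```

For example, `serve_one(("0.0.0.0", 8443), ("10.0.0.5", 8080), make_tls_context("lb-cert.pem", "lb-key.pem"))` would accept one HTTPS client on port 8443 and relay its decrypted request to a backend on port 8080 (all values hypothetical).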

In summary, load balancers play a critical role in distributing network traffic, optimizing resource utilization, ensuring high availability, and improving overall system performance. By efficiently balancing the load across multiple backend servers, load balancers enhance scalability, fault tolerance, and user experience in modern computer systems.

Summing Up

Load balancers distribute incoming network traffic across multiple servers, optimizing resource utilization and improving performance. They act as a mediator between clients and backend servers, ensuring efficient traffic distribution based on predefined algorithms. Load balancers monitor server health, remove unhealthy servers, and enable scalability by adding more servers to handle increased workload. Scaling can be achieved through vertical scaling (increasing capacity of a single server) or horizontal scaling (adding more servers). Load balancers play a crucial role in maintaining high availability, session persistence, and SSL termination, enhancing system performance and ensuring a seamless user experience.