A rate limiter is a common component in system design that helps control the rate at which certain operations or requests are allowed to occur. It acts as a mechanism to prevent overloading a system by limiting the number of requests or actions processed within a specified time period.

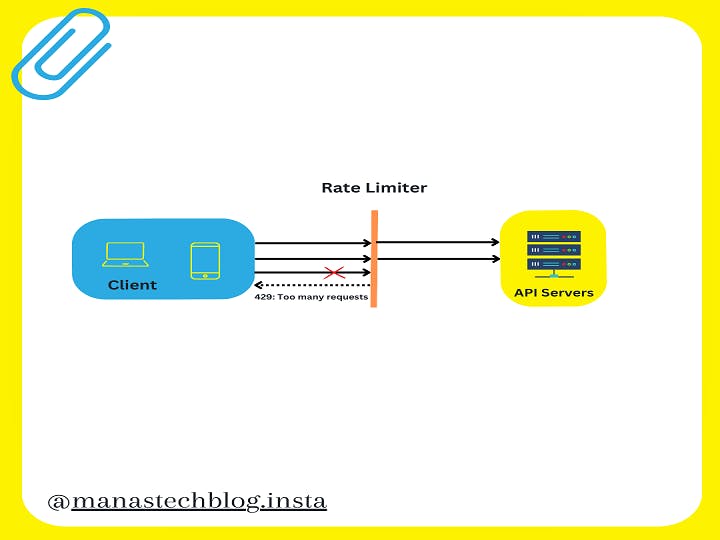

A rate limiter regulates the flow of traffic sent by a client or service. For HTTP APIs, it restricts the number of requests a client may make within a specified time window. If the number of API requests exceeds the threshold set by the rate limiter, the excess calls are blocked.
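
To make the idea concrete, here is a minimal sketch of the simplest possible policy, a fixed-window counter: each client gets `limit` requests per window and anything beyond that is rejected. The class name, the in-memory dictionary, and the injectable `clock` parameter are illustrative assumptions, not a production design. State lives in a single process, so this only works for one server instance.

```python
import time


class FixedWindowLimiter:
    """Allow at most `limit` requests per client in each fixed time window.

    Illustrative sketch: state lives in this process's memory, so it only
    works for a single server instance.
    """

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so tests can control time
        self.state = {}  # client_id -> (window index, requests counted in it)

    def allow(self, client_id):
        window = int(self.clock() // self.window_seconds)
        current_window, count = self.state.get(client_id, (window, 0))
        if current_window != window:
            count = 0  # a new window has started, so the count resets
        if count >= self.limit:
            self.state[client_id] = (window, count)
            return False  # surplus request: block it
        self.state[client_id] = (window, count + 1)
        return True


# Usage with a fake clock: 3 requests per 60-second window.
now = [0.0]
limiter = FixedWindowLimiter(limit=3, window_seconds=60, clock=lambda: now[0])
print([limiter.allow("client-a") for _ in range(4)])  # [True, True, True, False]
now[0] = 60.0  # the next window begins
print(limiter.allow("client-a"))  # True
```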

Benefits Of Using A Rate Limiter

Using a rate limiter in a system design offers several benefits:

Prevents Overload: A rate limiter helps prevent system overload by limiting the rate at which requests or actions are processed. By controlling the flow of incoming traffic, it ensures that the system resources are not overwhelmed, thereby maintaining system stability and performance.

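Protects Against DoS Attacks: Rate limiters help mitigate Denial of Service (DoS) attacks and can reduce the impact of some Distributed Denial of Service (DDoS) attacks. By restricting the number of requests from a single source or IP address, they make it difficult for any one client to flood the system with excessive traffic. A large DDoS attack spread across many addresses usually needs additional defenses, because each individual source may stay under its limit.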

Ensures Fair Resource Allocation: Rate limiting ensures fair allocation of system resources among users or clients. It prevents a few users from monopolizing the resources, allowing others to access the system and maintain a reasonable level of service.

Improves Scalability: By capping the rate of incoming requests, rate limiters keep the load within the capacity the system was provisioned for. This lets the system serve a growing number of users or clients predictably, without a few heavy users degrading performance for everyone else.

Enhances Stability and Availability: By capping sudden spikes in traffic before they reach backend services, rate limiters help maintain system stability and availability. They prevent cascading failures and performance degradation by smoothing out the traffic flow and ensuring that the system remains responsive and accessible to users.

Protects Third-Party Integrations: Rate limiters are useful when integrating with third-party APIs or services. They prevent excessive requests to external systems, reducing the risk of being blocked or encountering rate limit errors. This helps maintain a smooth and reliable integration with external services.

Enables Usage-Based Billing: Rate limiters can be used to enforce usage-based or tiered billing models. Because the limiter already counts requests per client within a given time frame, service providers can cap each customer at the quota their plan allows and use the same counts to track and charge for actual usage, supporting fair and transparent billing practices.

Key Points To Focus On When Designing A Rate Limiter

When designing a rate limiter, it is important to consider the following focus points:

Throughput and Performance: The rate limiter should be designed to handle the expected throughput and perform efficiently even under high load conditions. It should be able to process incoming requests or actions without introducing significant latency or performance degradation.

Scalability: The rate limiter should be designed to scale horizontally as the system load increases. It should be able to handle a growing number of users or clients and distribute the load across multiple instances or nodes if necessary.

Granularity: The rate limiter should provide options for defining the level of granularity for rate limiting. This could include limiting requests per second, per minute, per user, per IP address, or based on any other relevant criteria specific to the system.
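
One way to express granularity is to derive the key that requests are counted against from request attributes; the time unit (per second, per minute) is then simply the window length used with that key. The sketch below assumes a hypothetical `Request` type with `ip`, `user_id`, and `endpoint` fields and a hypothetical `rate_limit_key` helper; real web frameworks expose these attributes differently.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Request:
    # Hypothetical request shape used only for this illustration.
    ip: str
    user_id: Optional[str]
    endpoint: str


def rate_limit_key(request, granularity):
    """Build the key whose counter this request is charged against."""
    if granularity == "ip":
        return f"ip:{request.ip}"
    if granularity == "user":
        # Anonymous requests fall back to being limited by IP address.
        return f"user:{request.user_id}" if request.user_id else f"ip:{request.ip}"
    if granularity == "user_endpoint":
        who = request.user_id or request.ip
        return f"user:{who}:endpoint:{request.endpoint}"
    raise ValueError(f"unknown granularity: {granularity}")


req = Request(ip="203.0.113.7", user_id="alice", endpoint="/search")
print(rate_limit_key(req, "ip"))             # ip:203.0.113.7
print(rate_limit_key(req, "user_endpoint"))  # user:alice:endpoint:/search
```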

Configuration and Flexibility: The rate limiter design should allow for easy configuration and adjustment of parameters to adapt to changing needs. It should provide flexibility in setting rate limit thresholds, selecting rate limiting algorithms, and defining any specific rules or exceptions required.

Burst Handling: The rate limiter should handle bursts of traffic effectively. It should tolerate temporary spikes in request rates without blocking legitimate requests or causing unnecessary service interruptions. This can be achieved with algorithms designed for bursts, which let a client briefly exceed the average rate (for example, by spending capacity it saved up while idle) while still enforcing the limit over a longer period.
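
A well-known algorithm with this property is the token bucket: tokens accumulate at a steady rate up to a fixed capacity, and each request spends one. The minimal sketch below is an illustration rather than a production implementation; the `rate`, `capacity`, and injectable `clock` parameters are assumptions made for the example.

```python
import time


class TokenBucket:
    """Token bucket: refills at `rate` tokens per second, up to `capacity`.

    A client that has been idle can spend up to `capacity` tokens at once
    (a burst), but its long-run average cannot exceed `rate`.
    """

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity  # start full so an initial burst is allowed
        self.last_refill = clock()

    def allow(self, cost=1):
        now = self.clock()
        # Add the tokens earned since the last call, capped at capacity.
        elapsed = now - self.last_refill
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last_refill = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


now = [0.0]
bucket = TokenBucket(rate=1, capacity=5, clock=lambda: now[0])
print(sum(bucket.allow() for _ in range(10)))  # 5: the burst of 5 passes, the rest are rejected
now[0] = 2.0
print(sum(bucket.allow() for _ in range(10)))  # 2: two tokens refilled in 2 seconds
```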

Error Handling and Reporting: The rate limiter should return clear and meaningful error messages or status codes (for HTTP APIs, typically 429 Too Many Requests) to users or clients whose requests are rejected due to rate limiting. It should also log relevant information for monitoring and troubleshooting purposes.
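
In HTTP APIs the conventional status for a rejected request is 429 Too Many Requests, often paired with a `Retry-After` header telling the client how many seconds to wait. The helper below is a hypothetical sketch of building such a response and logging the rejection. The `X-RateLimit-*` header names are a widespread convention rather than a standard, and the JSON body shape is an assumption.

```python
import json
import logging
import math
from http import HTTPStatus

logger = logging.getLogger("rate_limiter")


def rate_limited_response(key, limit, retry_after_seconds):
    """Build (status, headers, body) for a request rejected by the limiter."""
    # Retry-After takes whole seconds; round up so clients do not retry too early.
    retry_after = max(1, math.ceil(retry_after_seconds))
    logger.info("rate limited key=%s limit=%d retry_after=%ds", key, limit, retry_after)
    body = json.dumps({
        "error": "rate_limit_exceeded",
        "message": f"Limit of {limit} requests exceeded; retry in {retry_after} seconds.",
    })
    headers = {
        "Content-Type": "application/json",
        "Retry-After": str(retry_after),
        # Conventional, non-standard headers; exact names vary between providers.
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": "0",
    }
    return HTTPStatus.TOO_MANY_REQUESTS, headers, body


status, headers, body = rate_limited_response("user:alice", limit=100, retry_after_seconds=12.3)
print(int(status), headers["Retry-After"])  # 429 13
```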

Distributed Systems Considerations: In distributed systems, the rate limiter design should account for the coordination and synchronization between multiple instances or nodes. It should ensure consistent rate limiting enforcement across the distributed components and avoid potential race conditions or conflicts.
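
The core of consistent distributed enforcement is that incrementing a client's counter and reading the new value must happen as one atomic step in a store shared by all nodes. The sketch below simulates such a store in-process with a lock. In a real deployment it would be an external service; Redis's `INCR` command, for instance, is atomic and is commonly used this way, typically together with `EXPIRE` to end each window. Window expiry is omitted here, and `SharedCounterStore` and `allow` are hypothetical names.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class SharedCounterStore:
    """In-process stand-in for a store shared by all limiter nodes.

    The lock makes increment-and-read a single atomic step, which is the
    property a real shared store must provide.
    """

    def __init__(self):
        self._counts = {}
        self._lock = threading.Lock()

    def incr(self, key):
        with self._lock:
            self._counts[key] = self._counts.get(key, 0) + 1
            return self._counts[key]


def allow(store, key, limit):
    # Increment first, then compare the returned value. A separate
    # "read, compare, write" sequence would let two nodes both see
    # count == limit - 1 and both admit a request.
    return store.incr(key) <= limit


store = SharedCounterStore()
with ThreadPoolExecutor(max_workers=8) as pool:
    results = list(pool.map(lambda _: allow(store, "user:alice", 10), range(50)))
print(sum(results))  # 10: exactly the limit, despite concurrent callers
```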

Graceful Degradation: The rate limiter should handle situations where it becomes unavailable or experiences failures gracefully. It should have fallback mechanisms or default behaviors in place to prevent total service disruption and allow the system to operate in a degraded mode if necessary.
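
One way to make this concrete is to decide in advance whether the limiter should fail open (admit traffic while it is down, favoring availability) or fail closed (reject traffic, favoring protection of the backend). The wrapper below is a hypothetical sketch; `LimiterUnavailable` stands in for whatever error your limiter's backing store raises when it cannot be reached, and `check` is any function that returns whether a key is within its limit.

```python
import logging

logger = logging.getLogger("rate_limiter")


class LimiterUnavailable(Exception):
    """Hypothetical error raised when the limiter's backing store is unreachable."""


def check_with_fallback(check, key, fail_open=True):
    """Run the limiter check without letting a limiter outage take the service down.

    fail_open=True admits requests while the limiter is down (favors availability);
    fail_open=False rejects them (favors protecting the backend).
    """
    try:
        return check(key)
    except LimiterUnavailable:
        logger.warning("rate limiter unavailable; failing %s for %s",
                       "open" if fail_open else "closed", key)
        return fail_open


def broken_check(key):
    raise LimiterUnavailable("store timed out")


print(check_with_fallback(broken_check, "user:alice"))                   # True
print(check_with_fallback(broken_check, "user:alice", fail_open=False))  # False
```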

Security Considerations: The rate limiter should account for security requirements such as preventing abusive or malicious behavior. It should be able to identify and block requests from suspicious sources, detect attack patterns, and provide protection against DoS or DDoS attacks.

Monitoring and Metrics: The rate limiter should provide mechanisms for monitoring and collecting relevant metrics to gain insights into the system's usage patterns, rate limiting effectiveness, and potential areas for optimization. This data can be valuable for capacity planning, performance analysis, and continuous improvement.

High Level Design Of A Rate Limiter

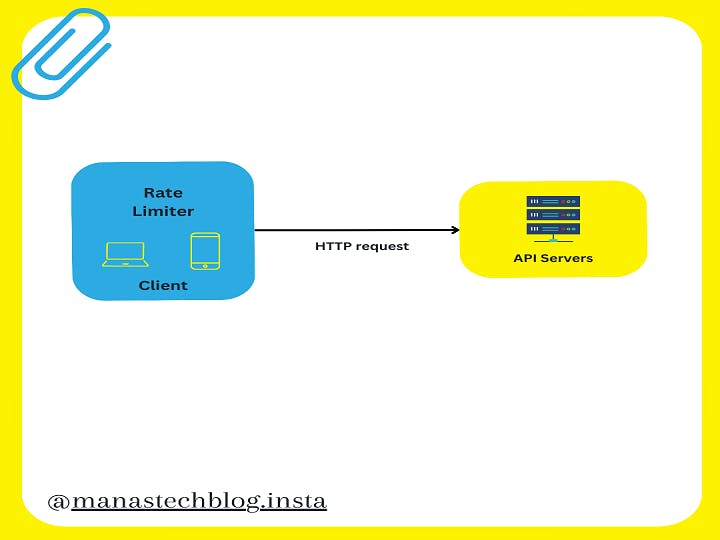

Client Side Rate Limiter

Advantages Of Client Side Rate Limiter

Flexibility and User Experience: Placing the rate limiter on the client side gives more control to the end user or client application. It allows clients to implement custom rate limiting logic based on their specific needs and constraints. This flexibility can be beneficial in scenarios where different clients have varying requirements or when rate limiting rules need to be tailored to specific client applications.

Offline Capabilities: Client-side rate limiting can be advantageous in scenarios where the client application needs to function offline or in limited connectivity situations. By enforcing rate limits locally, the client can continue to operate within the defined constraints even when it is disconnected from the server.

Reduced Server Load: Implementing rate limiting on the client side can help reduce the load on the server. By limiting the rate of requests before they are sent to the server, unnecessary requests that would be rejected anyway can be avoided. This can help optimize server resources and improve overall system scalability.
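
A simple form of client-side limiting is a throttle that spaces outgoing calls so the client never sends faster than an agreed rate. The `ClientThrottle` class below is a hypothetical sketch; `clock` and `sleep` are injectable so its timing can be checked without real waiting. Nothing forces a client to use such code, so it complements server-side enforcement rather than replacing it.

```python
import time


class ClientThrottle:
    """Client-side throttle: keeps at least 1 / max_per_second seconds between calls."""

    def __init__(self, max_per_second, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = 1.0 / max_per_second
        self.clock = clock
        self.sleep = sleep
        self.next_allowed = 0.0  # earliest time the next request may be sent

    def wait(self):
        """Block until the next request may be sent, then reserve that slot."""
        now = self.clock()
        if now < self.next_allowed:
            self.sleep(self.next_allowed - now)
            now = self.next_allowed
        self.next_allowed = now + self.min_interval


# Usage with a fake clock and sleep, so no real time passes.
fake_now = [0.0]

def fake_sleep(seconds):
    fake_now[0] += seconds

throttle = ClientThrottle(max_per_second=2, clock=lambda: fake_now[0], sleep=fake_sleep)
send_times = []
for _ in range(3):
    throttle.wait()
    send_times.append(fake_now[0])  # a real client would send its request here
print(send_times)  # [0.0, 0.5, 1.0]
```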

Disadvantages Of Client Side Rate Limiter

Lack of Uniform Enforcement: Client-side rate limiting relies on individual clients to enforce rate limits. This decentralized approach can result in inconsistencies if different clients implement rate limiting differently or fail to enforce it correctly. It becomes challenging to ensure consistent and uniform rate limiting across all clients.

Susceptibility to Manipulation: Since client-side rate limiting is under the control of the client application, it is vulnerable to manipulation or bypassing by malicious users. Clever attackers may attempt to modify or disable the rate limiting logic on the client-side, allowing them to exceed the defined limits and potentially disrupt the system.

Increased Development Complexity: Implementing rate limiting on the client side requires additional development effort for each client application. It can add complexity to the client-side codebase and may require updates and maintenance whenever rate limiting policies or requirements change.

Limited Visibility and Monitoring: With client-side rate limiting, it can be challenging to gain comprehensive visibility into overall system usage and to perform monitoring and analytics. Aggregating data from multiple clients can be difficult, making it harder to identify usage patterns, troubleshoot issues, or optimize system performance.

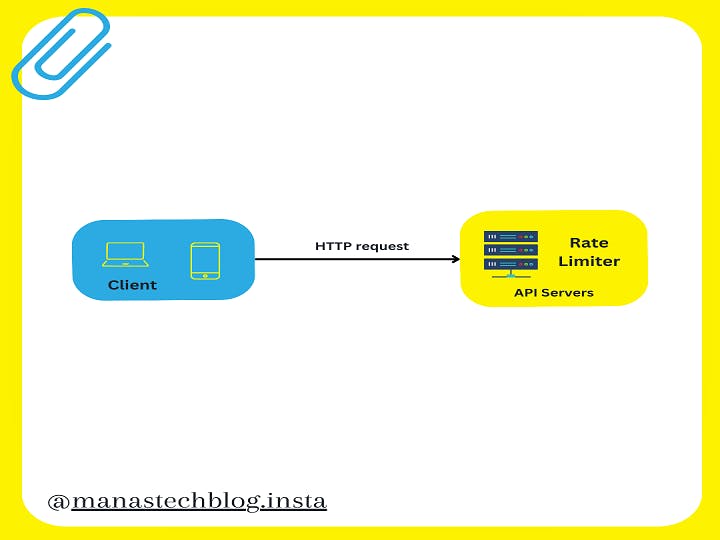

Server Side Rate Limiter

Advantages Of Server Side Rate Limiter

Centralized Control: Placing the rate limiter on the server side provides centralized control over rate limiting policies and enforcement. This allows for consistent rate limiting across all clients and ensures that the enforcement rules are applied uniformly.

Security and Protection: Server-side rate limiting provides better protection against abusive or malicious behavior. Because enforcement happens at the server level, on infrastructure the client does not control, attackers cannot bypass it by modifying or disabling client code, which is the main weakness of client-side rate limiting.

Aggregation and Analytics: Server-side rate limiting allows for better aggregation of usage data and analytics. By monitoring and analyzing the rate of requests at the server level, valuable insights can be gained about system usage patterns, potential bottlenecks, and areas for optimization.

Enforcing System-Wide Limits: Server-side rate limiting is essential when enforcing system-wide rate limits or limits that are based on shared resources. It ensures that all clients adhere to the defined limits, preventing any single client from monopolizing resources or causing performance degradation for others.

Disadvantages Of Server Side Rate Limiter

Increased Server Load: Placing the rate limiter on the server side can introduce additional load on the server, especially when dealing with a high number of incoming requests. The server needs to perform the rate limiting checks for each request, potentially impacting overall server performance and scalability.

Dependency on Network Connectivity: Server-side rate limiting relies on the availability of network connectivity between the client and server. If there are intermittent or unstable network connections, the rate limiting enforcement may not be consistently applied. This dependency can impact the reliability of rate limiting in such scenarios.

Potential Single Point of Failure: Implementing rate limiting on the server side introduces a potential single point of failure. If the rate limiter or the server itself experiences downtime or failures, it can disrupt the entire rate limiting mechanism, impacting all clients relying on the server-side enforcement.

Increased Response Time: Server-side rate limiting introduces additional processing time for each request, as the server needs to perform rate limiting checks. This can slightly increase the response time for requests, especially during periods of high load or when complex rate limiting algorithms are employed.

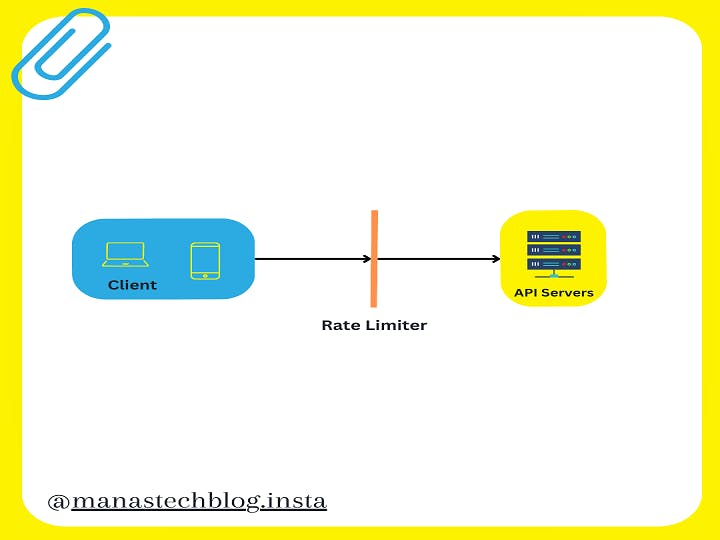

Rate Limiter As A Middleware

A rate limiter implemented as middleware plays a vital role in system design by controlling and regulating the rate of incoming requests or actions within the middleware layer of an application. It acts as an intermediary between the client and the server, managing the flow of traffic and preventing system overload.

When implemented as a middleware component, the Rate Limiter intercepts incoming requests before they reach the core application logic. It applies predefined rules and policies to determine whether the request should be allowed or blocked based on the defined rate limits.
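
To illustrate the interception point, here is a minimal sketch of rate limiting as Python WSGI middleware (the interface defined in PEP 3333). The middleware wraps the application callable and answers with 429 before the application runs when a client is over its limit. The class name, the per-IP fixed-window policy, and the in-memory state are assumptions for the example; note that `REMOTE_ADDR` may hold a proxy's address when the app runs behind a load balancer.

```python
import math
import time


class RateLimitMiddleware:
    """WSGI middleware that applies a fixed-window limit per client IP.

    The check runs before the wrapped application is called, so rejected
    requests never reach the core application logic.
    """

    def __init__(self, app, limit, window_seconds, clock=time.monotonic):
        self.app = app
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        self.state = {}  # client IP -> (window index, requests counted in it)

    def __call__(self, environ, start_response):
        ip = environ.get("REMOTE_ADDR", "unknown")
        now = self.clock()
        window = int(now // self.window_seconds)
        current_window, count = self.state.get(ip, (window, 0))
        if current_window != window:
            count = 0  # new window: reset this client's count
        if count >= self.limit:
            self.state[ip] = (window, count)
            retry_after = math.ceil((window + 1) * self.window_seconds - now)
            start_response("429 Too Many Requests", [
                ("Content-Type", "text/plain"),
                ("Retry-After", str(max(1, retry_after))),
            ])
            return [b"Rate limit exceeded\n"]
        self.state[ip] = (window, count + 1)
        return self.app(environ, start_response)  # within the limit: pass through


def hello_app(environ, start_response):
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hello\n"]


app = RateLimitMiddleware(hello_app, limit=2, window_seconds=60, clock=lambda: 0.0)
statuses = []

def capture(status, headers, exc_info=None):
    statuses.append(status)

for _ in range(3):
    app({"REMOTE_ADDR": "198.51.100.1"}, capture)
print(statuses)  # ['200 OK', '200 OK', '429 Too Many Requests']
```

Because the wrapped `app` is itself an ordinary WSGI application, it can be served by any WSGI server, for example with `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` from the standard library.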

Here is a detailed explanation of how a rate limiter works as middleware:

Placement in the Middleware Layer: The Rate Limiter is positioned within the middleware layer of an application, between the client and the core application logic. This allows it to intercept incoming requests and apply rate limiting rules before they reach the main processing components.

Intercepting Incoming Requests: As requests flow through the middleware pipeline, the Rate Limiter middleware intercepts each incoming request, typically at the entry point of the application. It examines the request details to determine if it complies with the defined rate limits and policies.

Rate Limiting Policies: The Rate Limiter middleware applies a set of predefined rate limiting policies that specify the allowed rate of requests per unit of time. These policies may be based on criteria such as the client's IP address, user identity, request type, or any other relevant attributes.

Enforcing Rate Limits: Based on the defined policies, the Rate Limiter middleware compares the incoming request rate against the specified thresholds. If the rate of requests exceeds the allowed limit within the defined time frame, the middleware takes appropriate action to enforce the rate limit.

Handling Excess Requests: When the rate of incoming requests exceeds the defined limit, the Rate Limiter middleware can take different actions depending on the system requirements. It may block or reject the excess requests, respond with an error message or status code, or implement a queuing mechanism to hold excess requests for later processing.
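
The choice between rejecting and queuing can be sketched as a small dispatcher: requests within the limit are processed, a bounded number of excess requests are held, and anything beyond that is rejected. `RejectOrQueue` is a hypothetical name, and `has_capacity` stands for any limiter check that returns `True` (and counts the request) when it is within the limit.

```python
from collections import deque


class RejectOrQueue:
    """Process a request now, hold it in a bounded queue, or reject it."""

    def __init__(self, has_capacity, max_queue):
        self.has_capacity = has_capacity
        self.max_queue = max_queue
        self.queue = deque()

    def submit(self, request):
        if self.has_capacity():
            return "processed"
        if len(self.queue) < self.max_queue:
            self.queue.append(request)  # held until capacity frees up
            return "queued"
        return "rejected"  # queue full: respond with an error such as 429

    def drain(self):
        """Release queued requests, oldest first, while the limiter has capacity."""
        released = []
        while self.queue and self.has_capacity():
            released.append(self.queue.popleft())
        return released


# Usage with a toy capacity counter standing in for a real limiter.
tokens = [2]

def has_capacity():
    if tokens[0] > 0:
        tokens[0] -= 1
        return True
    return False

handler = RejectOrQueue(has_capacity, max_queue=2)
print([handler.submit(f"req-{i}") for i in range(5)])
# ['processed', 'processed', 'queued', 'queued', 'rejected']
tokens[0] = 1  # capacity frees up later
print(handler.drain())  # ['req-2']
```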

Granularity and Configuration: The Rate Limiter middleware provides flexibility in setting the level of granularity for rate limiting. It allows configuring different rate limits based on various criteria such as per client, per user, per API endpoint, or any other relevant attributes. The configuration options enable fine-tuning the rate limiting rules to align with the specific needs of the application.
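
Per-endpoint configuration can be as simple as a table mapping path prefixes to limits. The `Policy` type, the `POLICIES` table, the `policy_for` helper, and the longest-prefix-wins rule below are illustrative assumptions, and the numbers are placeholders rather than recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    limit: int           # maximum number of requests ...
    window_seconds: int  # ... allowed per this many seconds


# Hypothetical policy table: path prefix -> policy. The longest matching prefix wins.
POLICIES = {
    "/api/login": Policy(limit=5, window_seconds=60),    # stricter limit for a sensitive endpoint
    "/api/search": Policy(limit=30, window_seconds=60),
    "/api/": Policy(limit=100, window_seconds=60),       # default for other API routes
}


def policy_for(path, policies=POLICIES):
    matches = [prefix for prefix in policies if path.startswith(prefix)]
    if not matches:
        return None  # no limit configured for this path
    return policies[max(matches, key=len)]


print(policy_for("/api/login"))      # Policy(limit=5, window_seconds=60)
print(policy_for("/api/orders/42"))  # Policy(limit=100, window_seconds=60)
print(policy_for("/health"))         # None
```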

Integration with Monitoring and Logging: The Rate Limiter middleware often integrates with monitoring and logging mechanisms to provide visibility into the rate limiting process. It can generate logs or metrics that capture information about the rate of requests, rejected requests, or any other relevant data. This information is valuable for analyzing system usage patterns, identifying potential issues, and optimizing the rate limiting strategy.

Scalability and Performance Considerations: As a middleware component, the Rate Limiter needs to be designed with scalability and performance in mind. It should be capable of efficiently handling a high volume of requests and enforcing rate limits without introducing significant latency or bottlenecks.

By incorporating a rate limiter as middleware, system designers can achieve effective control over the flow of requests, ensuring system stability, protecting against abuse or overload, and providing a reliable and consistent user experience. The middleware approach allows for flexibility, easy integration within the application architecture, and central control over rate limiting policies.

Summing Up

In summary, rate limiters offer a range of benefits including preventing overload, protecting against attacks, ensuring fair resource allocation, improving scalability, enhancing stability and availability, safeguarding third-party integrations, and enabling usage-based billing. These benefits contribute to the overall reliability, performance, and security of a system.

In the next part, we will look at some of the algorithms used to implement rate limiters.